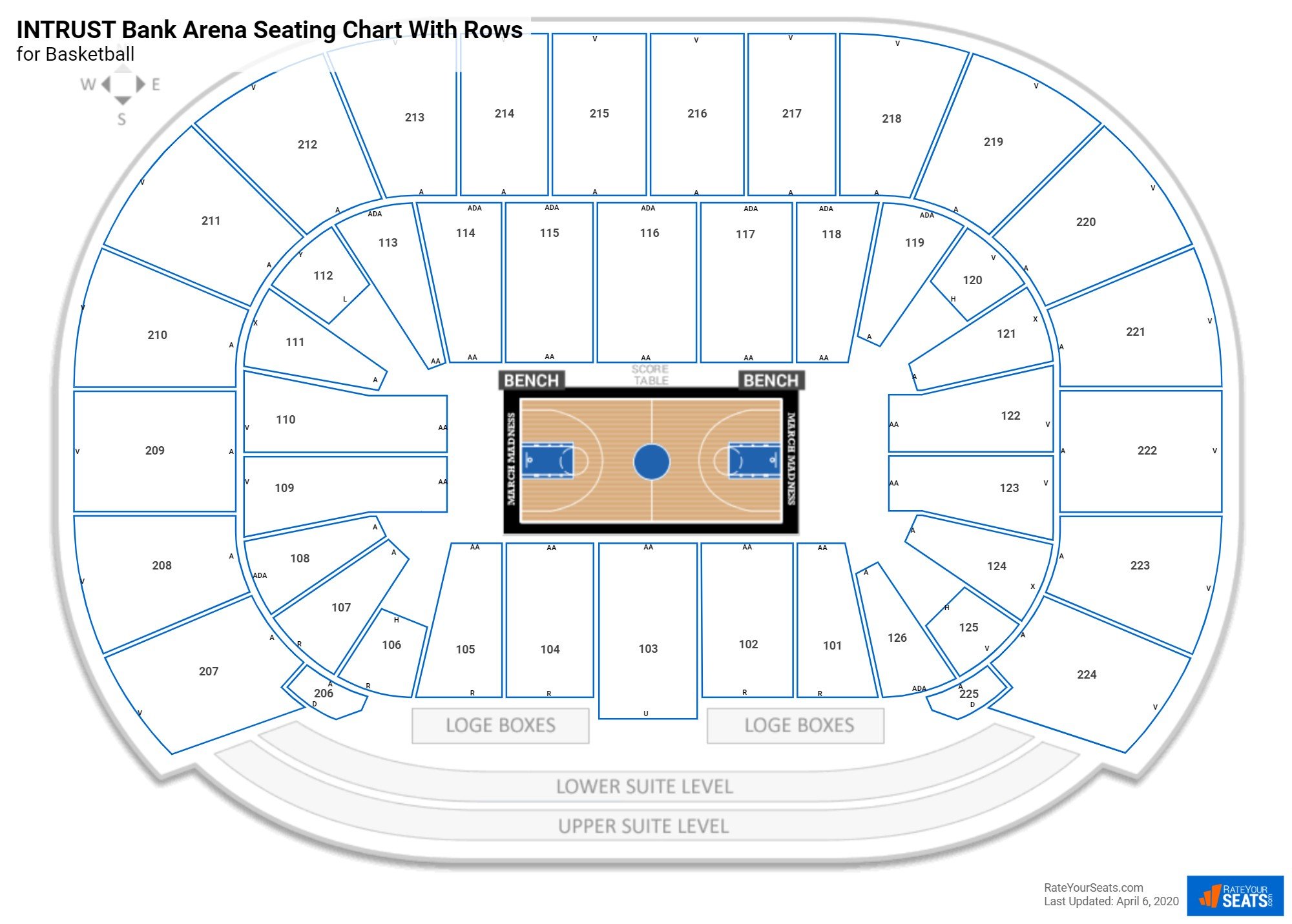

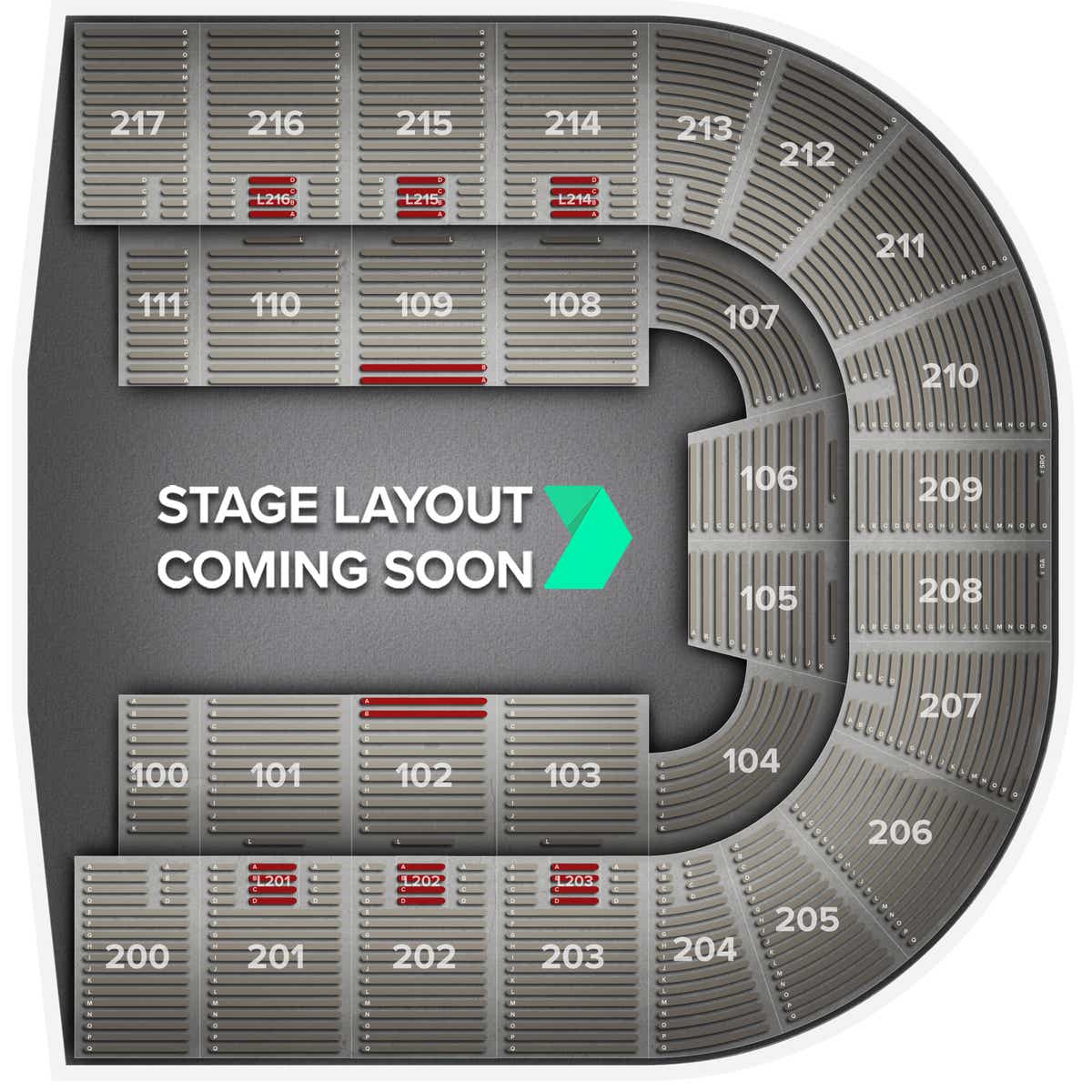

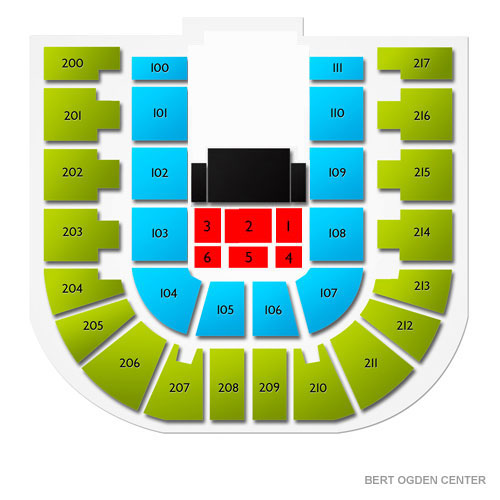

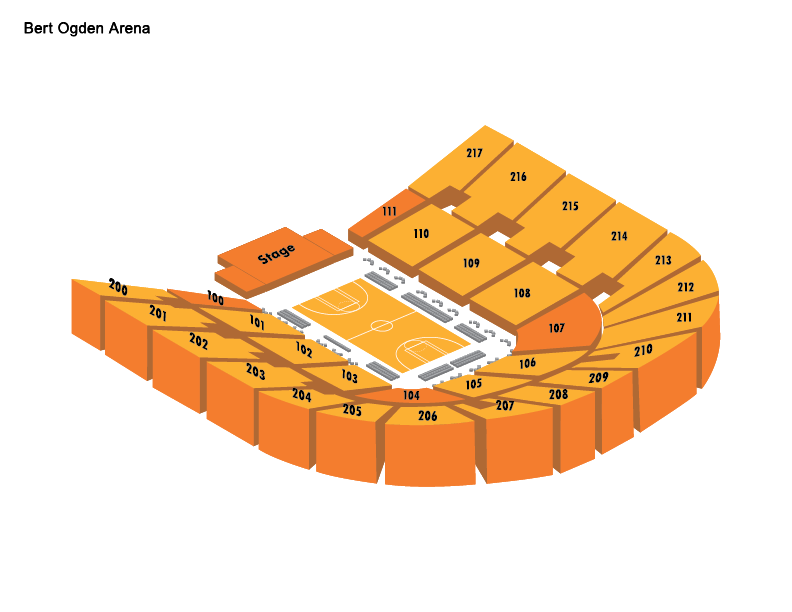

Bert Ogden Arena Seating Chart

In the following, we'll explore BERT models from the ground up: what they are, how they work, and, most importantly, how to use them practically in your projects.

Bidirectional Encoder Representations from Transformers (BERT) is a language model introduced in October 2018 by researchers at Google, who presented it as a new language representation model.[1][2] It learns to represent text as a sequence of vectors. BERT is an open source machine learning framework for natural language processing (NLP) designed to help computers understand the meaning of ambiguous language by using the surrounding text to establish context. Instead of reading a sentence in just one direction, it reads it both ways.

BERT is a bidirectional transformer pretrained on unlabeled text with two objectives: predicting masked tokens in a sentence and predicting whether one sentence follows another. The main idea is that by randomly masking some tokens, the model can train on the text both to the left and to the right of each masked position.
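To make the two pretraining objectives more concrete, here is a minimal, stdlib-only Python sketch of how training examples for them might be built. It is an illustration under stated assumptions, not BERT's actual preprocessing code: the `[MASK]` string, the 15% selection rate, and the 80/10/10 replacement split follow the recipe commonly cited from the original BERT paper, and the helper names `mask_tokens` and `make_nsp_pair` are hypothetical. The next-sentence sampler is also simplified: it draws negative examples from the same list of sentences rather than from a different document.

```python
import random
from typing import List, Optional, Tuple

# Assumed mask-token string, as used in common BERT vocabularies.
MASK = "[MASK]"


def mask_tokens(
    tokens: List[str],
    vocab: List[str],
    mask_prob: float = 0.15,
    rng: Optional[random.Random] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Hypothetical helper for masked-token prediction.

    Returns (masked_tokens, labels): labels[i] holds the original token at
    positions selected for prediction and None everywhere else.
    """
    rng = rng or random.Random()
    masked = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected; the model is not asked to predict it
        labels[i] = tok  # the model must recover this original token
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK  # assumed 80%: replace with the mask token
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # assumed 10%: replace with a random token
        # remaining 10%: leave the original token in place
    return masked, labels


def make_nsp_pair(
    sentences: List[str],
    i: int,
    rng: Optional[random.Random] = None,
) -> Tuple[str, str, bool]:
    """Hypothetical helper for next-sentence prediction.

    Returns (sentence_a, sentence_b, is_next). Simplification: negatives come
    from the same list, excluding sentence i and its true successor.
    """
    if len(sentences) < 3:
        raise ValueError("need at least 3 sentences to sample a negative pair")
    rng = rng or random.Random()
    a = sentences[i]
    if i + 1 < len(sentences) and rng.random() < 0.5:
        return a, sentences[i + 1], True  # positive: the actual next sentence
    candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
    return a, rng.choice(candidates), False  # negative: not the next sentence
```

Because every selected position keeps its original token in `labels`, a training loss can be computed only at those positions, while the model still sees the unmasked context on both sides of each one.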
