Fcn My Chart

A fully convolutional network (FCN) is a neural network that performs only convolution (and subsampling or upsampling) operations. Equivalently, a convolutional neural network (CNN) that does not have fully connected layers is called an FCN. The difference between an FCN and a regular CNN is that the former does not have fully connected layers.

In an FCN you don't flatten the last convolutional layer, so you don't need a fixed feature map shape, and so you don't need an input with a fixed size. The effect is as if you had several fully connected layers centered on different locations, with the end result produced by a weighted vote among them. Pleasant side effect of FCN is ... In both cases, you don't need a ... Thus it is an end ...

The second path is the symmetric expanding path (also called the decoder), which enables precise localization using transposed convolutions. In the next level, we use the predicted segmentation maps as a second input channel to the 3D FCN while learning from the images at a higher resolution, downsampled by ...

Related questions: FCNN is easily overfitting due to many params, then why didn't it reduce the ... I am trying to understand the PointNet network for dealing with point clouds and am struggling to understand the difference between FC and MLP. I'm trying to replicate a paper from Google on view synthesis/lightfields from 2019: View Synthesis with Learned Gradient Descent.

Understanding FCN Fixed Coupon Notes in One Article - Zhihu
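The point about input size can be illustrated with a minimal pure-Python sketch. It is not taken from any of the quoted answers: the helper names `conv2d_valid` and `dense_on_flattened`, the 2x2 filter, and the toy inputs are all assumptions chosen for illustration. A convolution slides over whatever input it receives, while a fully connected layer on a flattened input only accepts the size it was built for.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (lists of lists), stride 1, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def dense_on_flattened(image, weights):
    """Fully connected layer on the flattened image; needs a fixed input size."""
    flat = [v for row in image for v in row]
    if len(flat) != len(weights):
        raise ValueError(f"expected {len(weights)} inputs, got {len(flat)}")
    return sum(v * w for v, w in zip(flat, weights))


# Hypothetical 2x2 filter and two inputs of different sizes.
kernel = [[1.0, 0.0], [0.0, -1.0]]
small = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # 4x4
large = [[float(r * 6 + c) for c in range(6)] for r in range(5)]  # 5x6

# The same convolution works on both; only the feature map size changes.
small_map = conv2d_valid(small, kernel)  # 3x3 feature map
large_map = conv2d_valid(large, kernel)  # 4x5 feature map

# The dense layer is sized for a flattened 4x4 input and rejects anything else.
dense_weights = [0.1] * 16
dense_on_flattened(small, dense_weights)
try:
    dense_on_flattened(large, dense_weights)
    dense_accepts_large = True
except ValueError:
    dense_accepts_large = False
```

In this sketch the convolution's weight count (four values) is independent of the input size, which is why the layer can be reused unchanged on the larger input.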

MyChart Login Page

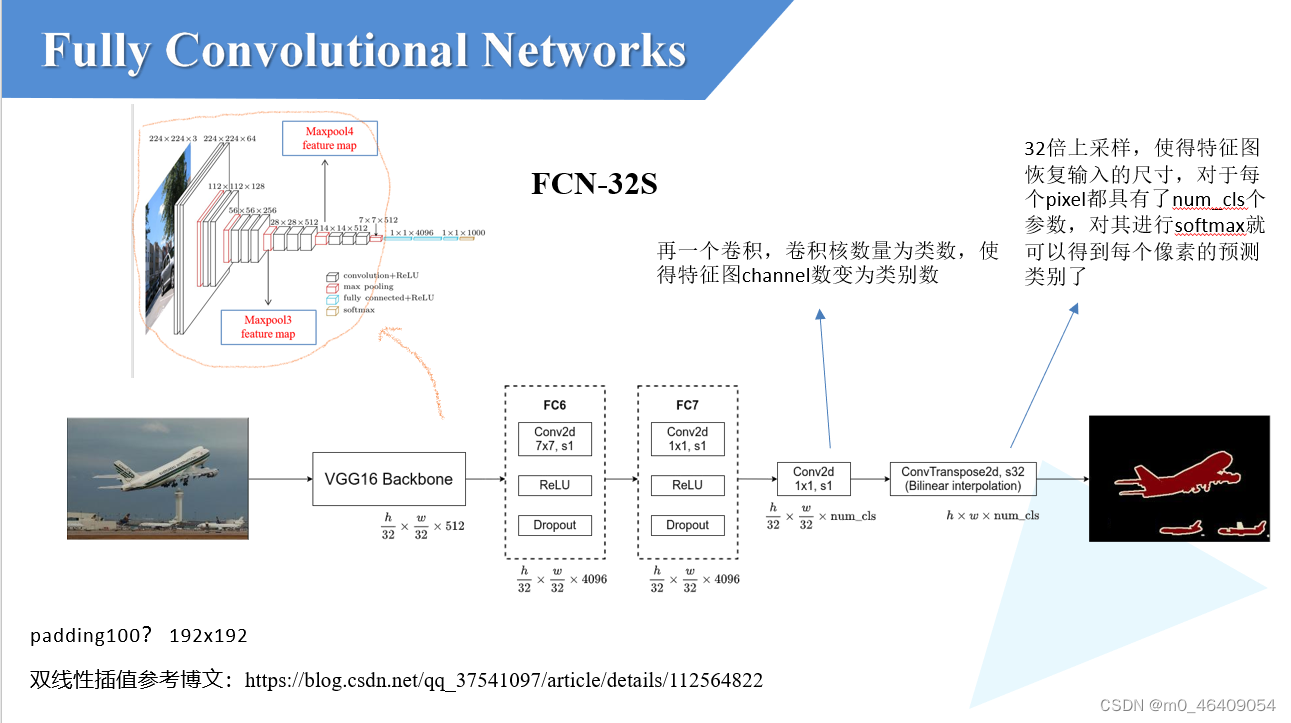

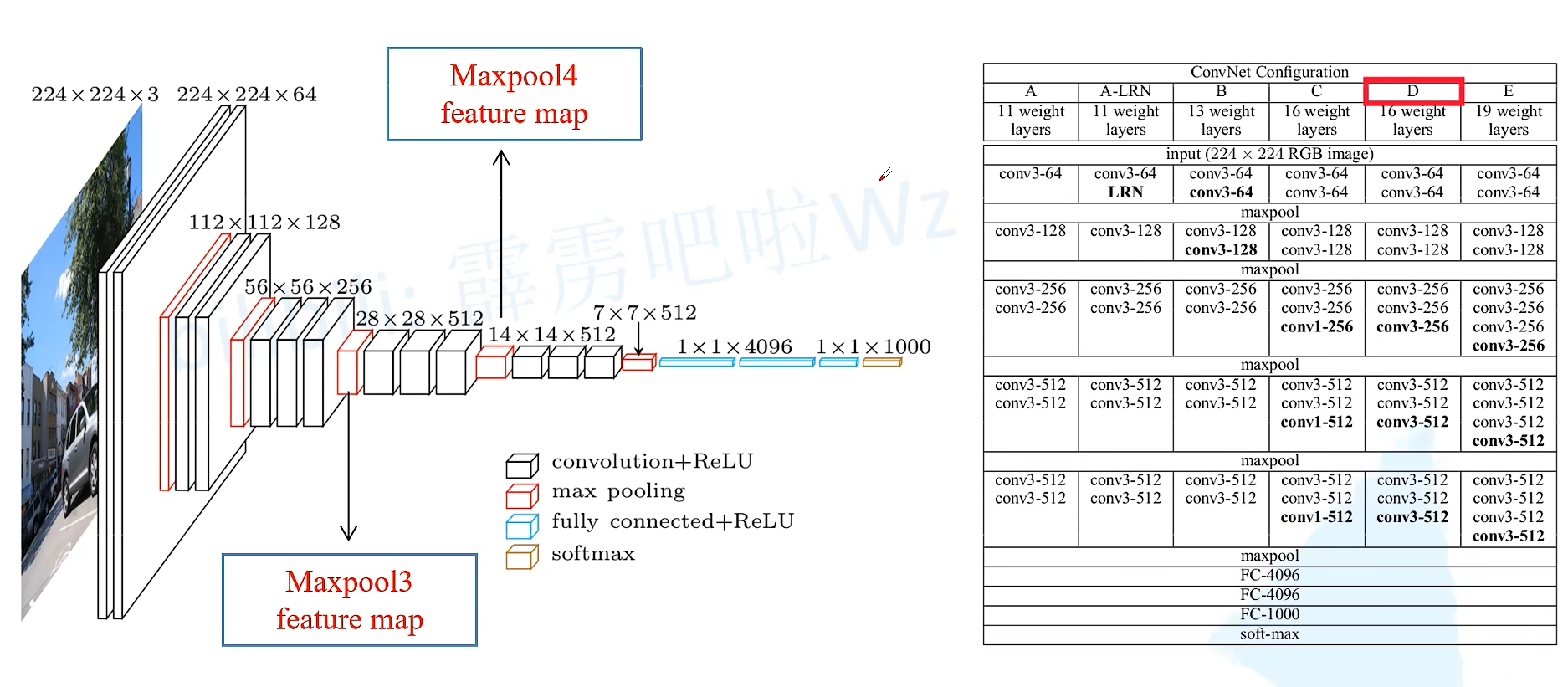

FCN Fully Convolutional Neural Network - CSDN Blog

Help Centre What is Fixed Coupon Note (FCN) and how does it work?

FTI Consulting Trending Higher TradeWins Daily

Schematic picture of fully convolutional network (FCN) improving... Download Scientific Diagram

FCN Stock Price and Chart — NYSEFCN — TradingView

MyChart preregistration opens May 30 Clinics & Urgent Care Skagit &

FCN Network Explained: FCN Model Parameter Count - CSDN Blog

A convolutional neural network (CNN) that does not have fully connected layers is called a fully convolutional network (FCN).

The difference between an FCN and a regular CNN is that the former does not have fully connected layers.

The effect is as if you had several fully connected layers centered on different locations, with the end result produced by a weighted vote among them.
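One way to read this statement is sketched below in plain Python, under stated assumptions: the function names `dense`, `slide_dense`, and `vote`, the three-tap weights, and the vote weights are hypothetical and not from the source. The same small fully connected layer is applied to a window centered at each location, which is exactly a 1D convolution, and the per-location scores are then combined by a weighted average.

```python
def dense(window, weights, bias):
    """A tiny fully connected layer: dot product plus bias."""
    return sum(x * w for x, w in zip(window, weights)) + bias


def slide_dense(signal, weights, bias):
    """Apply the same dense layer at every location (a 1D convolution)."""
    k = len(weights)
    return [dense(signal[i:i + k], weights, bias)
            for i in range(len(signal) - k + 1)]


def vote(scores, vote_weights):
    """Combine per-location scores with a weighted average."""
    total = sum(vote_weights)
    return sum(s * w for s, w in zip(scores, vote_weights)) / total


weights = [0.5, -1.0, 0.5]             # hypothetical dense weights, window of 3
signal = [0.0, 1.0, 0.0, 2.0, 2.0]     # longer than the window

scores = slide_dense(signal, weights, bias=0.0)  # one score per location
result = vote(scores, [1.0, 2.0, 1.0])           # center location counts double
```

Here `scores` holds one output per window position, so a longer `signal` simply yields more votes rather than a shape error.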

The second path is the symmetric expanding path (also called the decoder), which enables precise localization using transposed convolutions.
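As a rough illustration of what a transposed convolution does in the expanding path, here is a minimal 1D sketch in plain Python. The function name `conv_transpose1d`, the stride of 2, and the kernel values are assumptions made for this example; in a real decoder the kernel would be learned and the operation would be 2D or 3D.

```python
def conv_transpose1d(signal, kernel, stride=2):
    """1D transposed convolution: each input value stamps a scaled copy of
    `kernel` into the output, with consecutive stamps `stride` apart."""
    out_len = (len(signal) - 1) * stride + len(kernel)
    out = [0.0] * out_len
    for i, x in enumerate(signal):
        for j, w in enumerate(kernel):
            out[i * stride + j] += x * w
    return out


coarse = [1.0, 2.0, 3.0]   # low-resolution feature row
kernel = [1.0, 1.0]        # hypothetical kernel; this choice copies each value

upsampled = conv_transpose1d(coarse, kernel, stride=2)
```

With this particular kernel and stride the output is twice as long as the input and repeats each value, so the operation reduces to nearest-neighbour upsampling; other kernel values give other interpolation patterns.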
