SHAP Charts

SHAP (SHapley Additive exPlanations) is a game-theoretic approach to explaining the output of any machine learning model. It connects optimal credit allocation with local explanations. The introductory overview is a living document.

The API reference covers the public objects and functions in shap, and example notebooks demonstrate how to use the API of each object/function. The primary explainer interface for the SHAP library uses Shapley values to explain any machine learning model or Python function. It takes any combination of a model and...

The examples are all generated from Jupyter notebooks available on GitHub. Text examples explain machine learning models applied to text data, and image examples explain machine learning models applied to image data. Topical overviews include "An introduction to explainable AI with Shapley values" and "Be careful when interpreting predictive models in search of causal insights".

Several notebooks are referenced. One illustrates decision plot features and use: SHAP decision plots show how complex models arrive at their predictions (i.e., how models make decisions). Another shows how the SHAP interaction values for a very simple function are computed, starting with a simple linear function and then adding an interaction term to see how it changes. One example sets the explainer using the Kernel explainer (a model-agnostic explainer), and another takes a previously trained Keras model and explains why it makes different predictions on individual samples.

SHAP plots of the XGBoost model. (A) The classified bar charts of the...

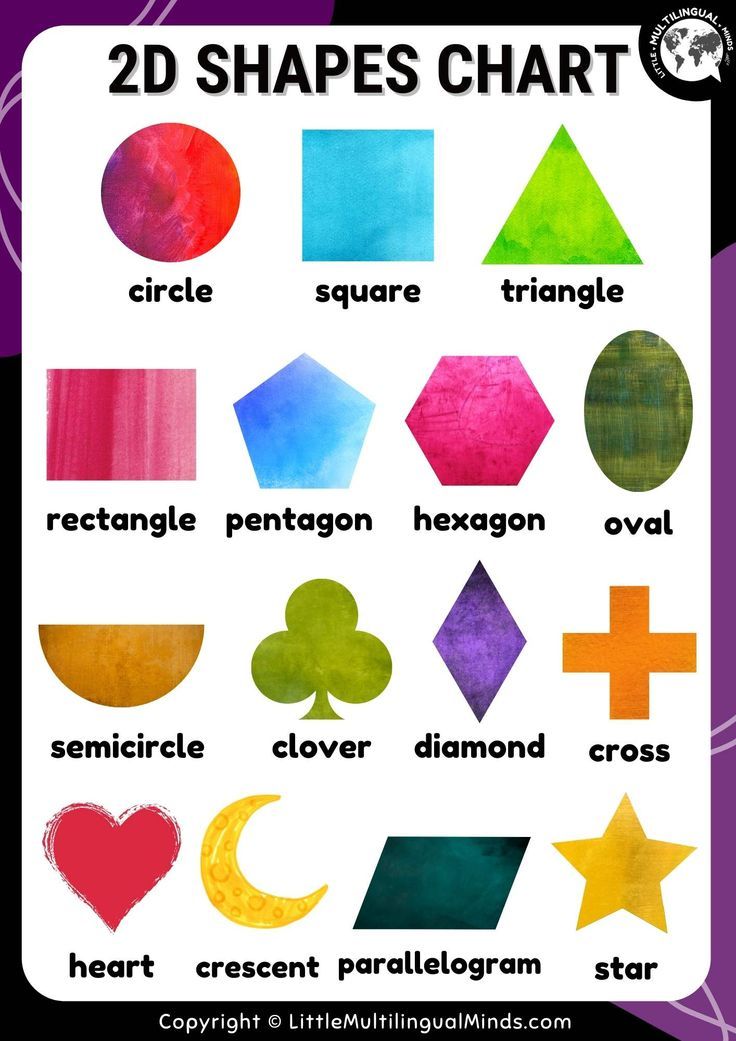

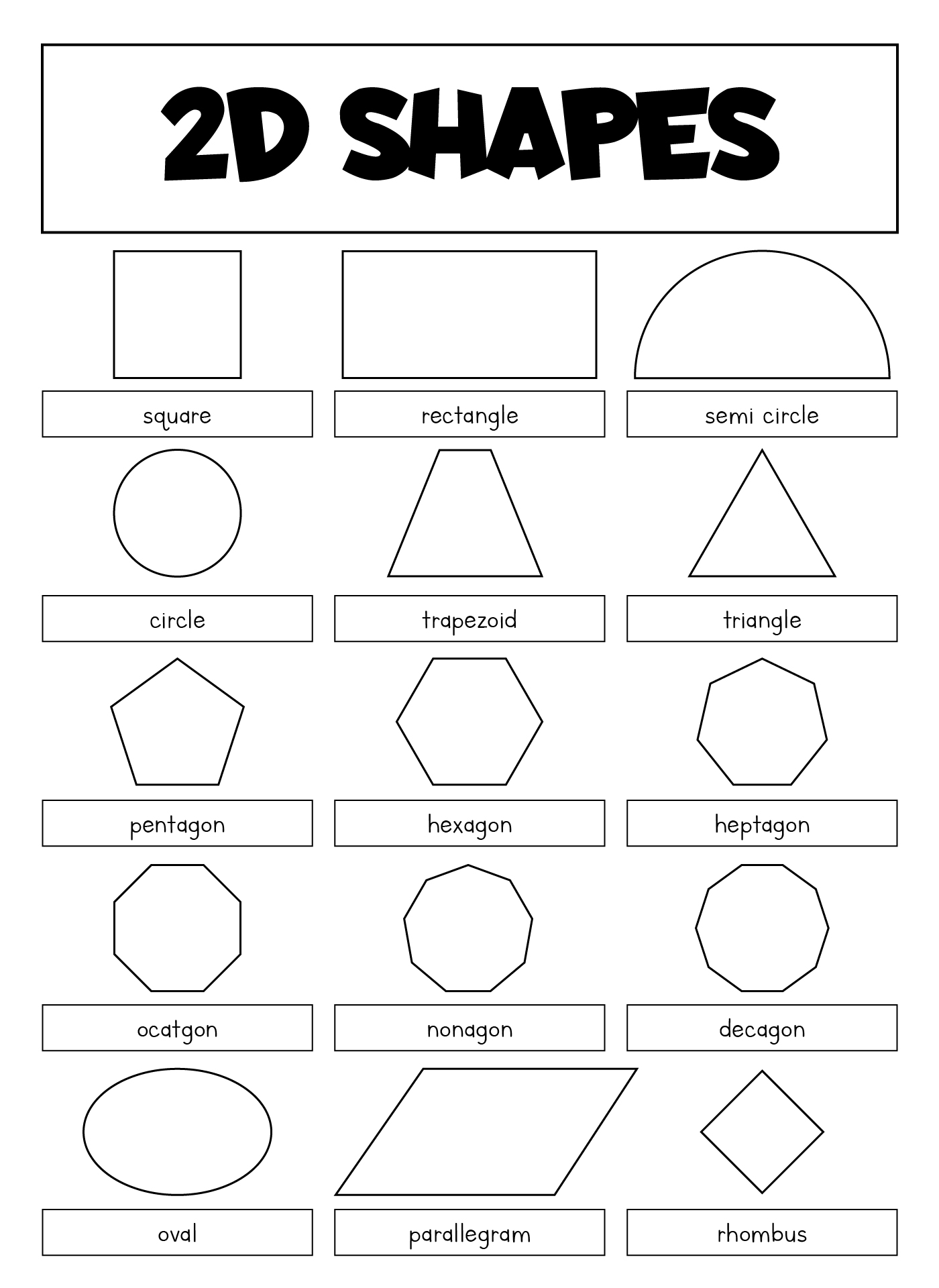

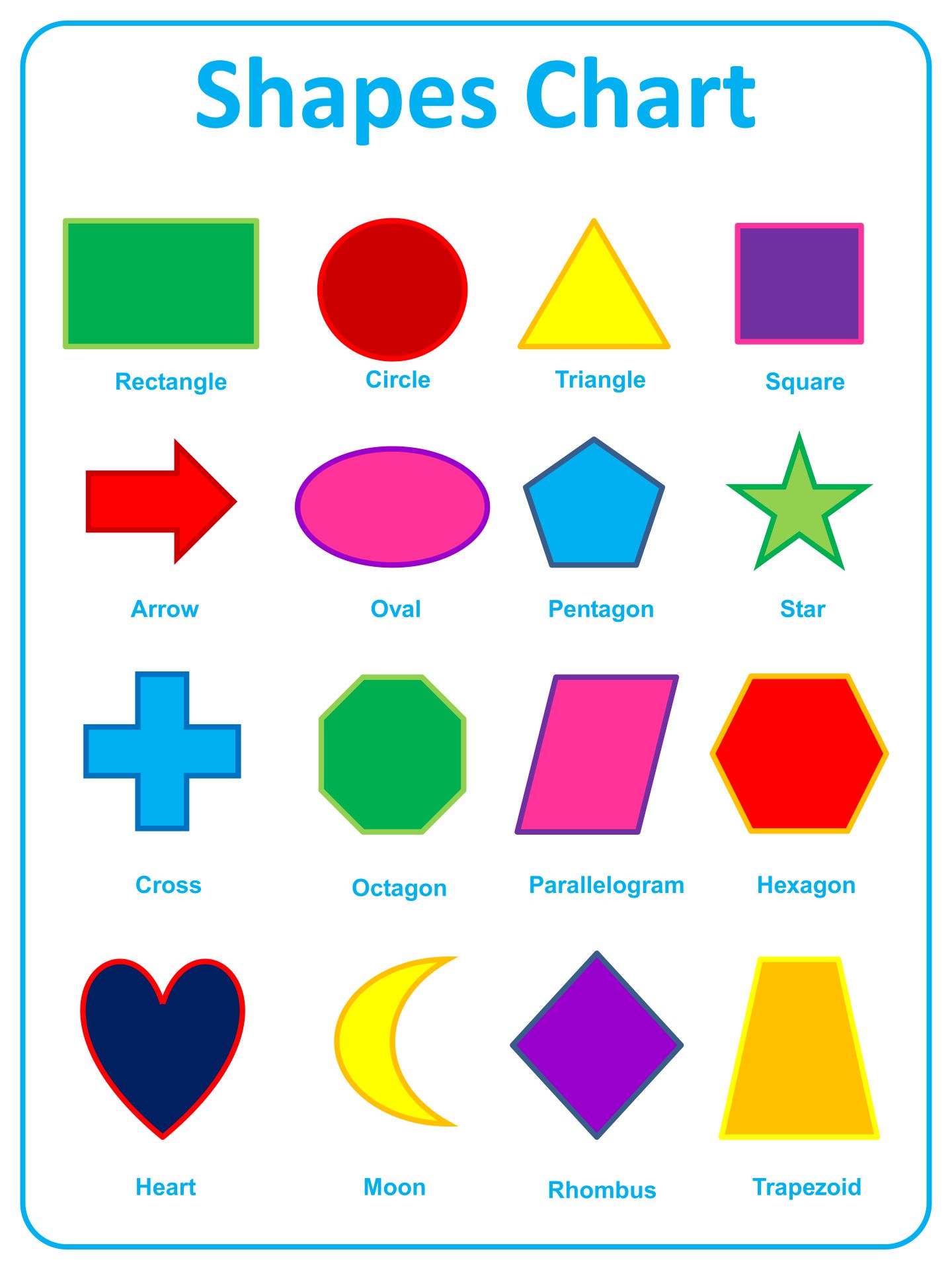

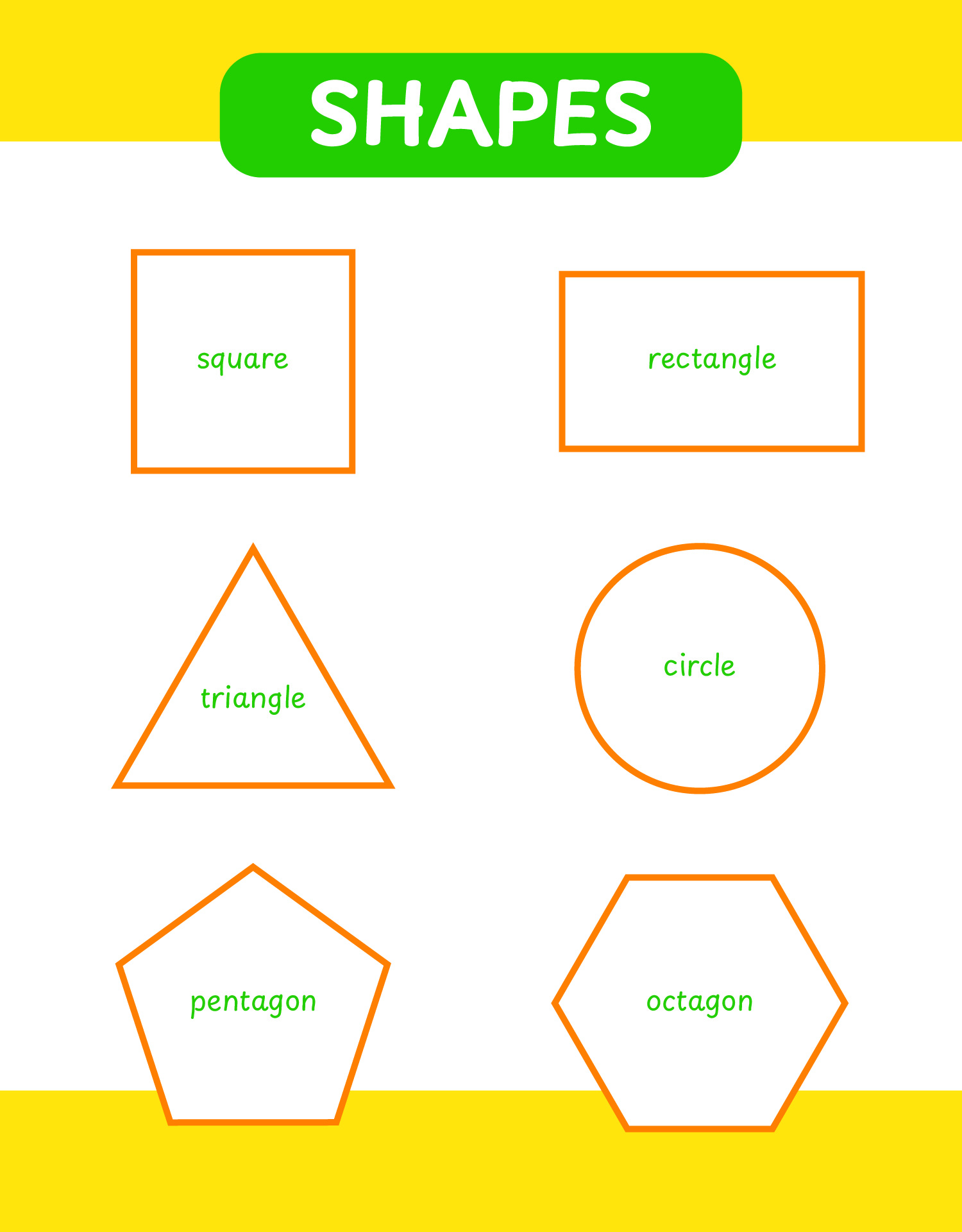

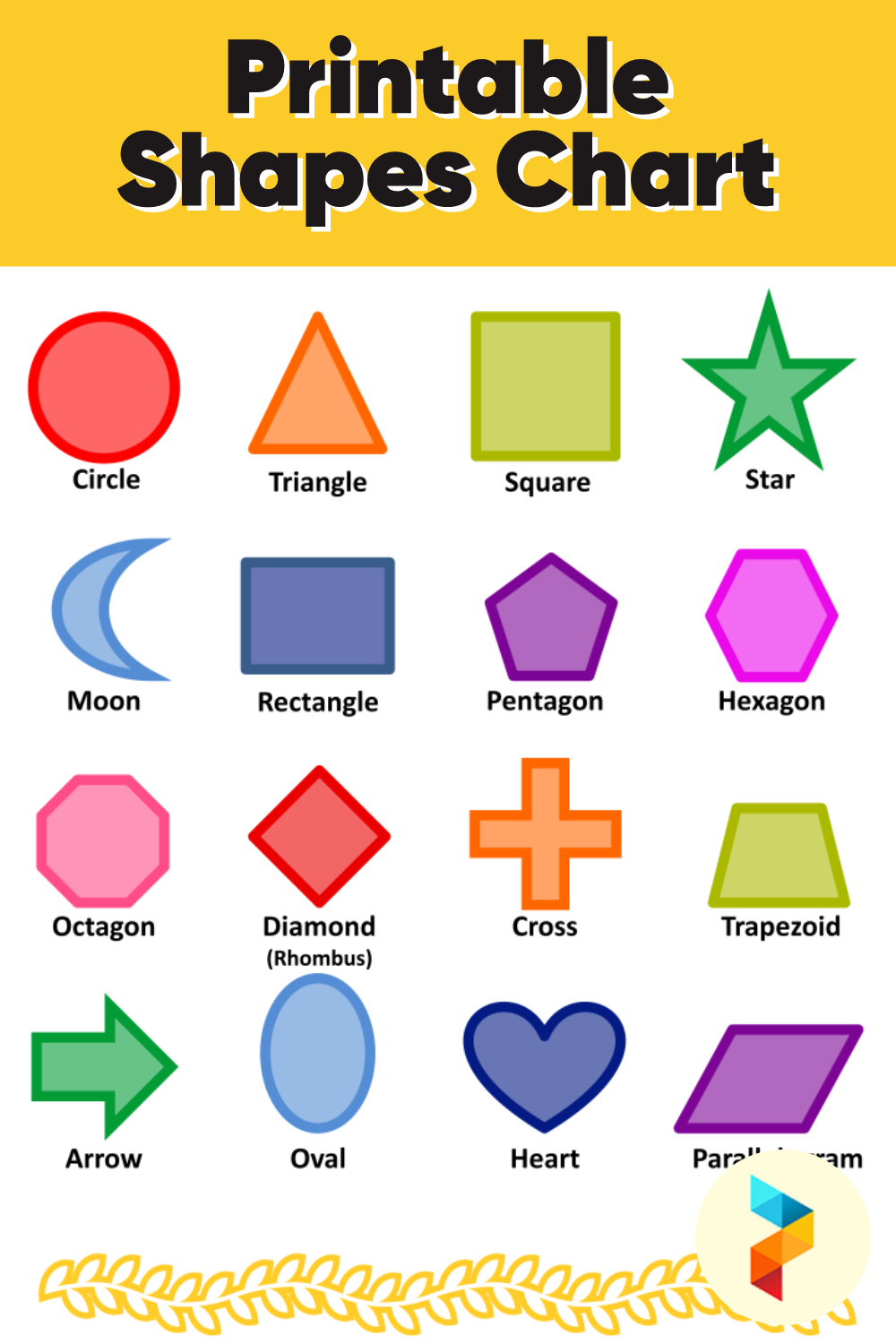

Printable Shapes Chart

Shapes Chart 10 Free PDF Printables Printablee

Shape Chart Printable Printable Word Searches

Explaining Machine Learning Models: A Non-Technical Guide to Interpreting SHAP Analyses

Printable Shapes Chart Printable Word Searches

Summary plots for SHAP values. For each feature, one point corresponds...

10 Best Printable Shapes Chart

Feature importance based on SHAP values. On the left side, the mean...

Set The Explainer Using The Kernel Explainer (Model-Agnostic Explainer).
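As background for the Kernel explainer: the Kernel SHAP method is described in the SHAP paper as fitting a weighted linear model over feature coalitions, with each coalition weighted by the Shapley kernel. The sketch below computes only that weight in plain Python. It is not the shap library's implementation, and the function name `shapley_kernel_weight` is invented for illustration.

```python
from math import comb


def shapley_kernel_weight(num_features: int, coalition_size: int) -> float:
    """Shapley kernel weight for a coalition with `coalition_size` of
    `num_features` features present.

    The empty and full coalitions have infinite weight in theory (they act
    as hard constraints), so this sketch returns float("inf") for them.
    """
    m, s = num_features, coalition_size
    if not 0 <= s <= m:
        raise ValueError("coalition_size must be between 0 and num_features")
    if s == 0 or s == m:
        return float("inf")
    return (m - 1) / (comb(m, s) * s * (m - s))


# Weights for every coalition size of a hypothetical 4-feature model.
for size in range(5):
    print(size, shapley_kernel_weight(4, size))
```

The weights are symmetric in coalition size and largest for very small and very large coalitions, which are the ones that isolate the effect of a single feature most directly.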

We Start With A Simple Linear Function, And Then Add An Interaction Term To See How It Changes.
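A minimal sketch of that progression, in plain Python rather than the shap library. It assumes a single fixed baseline stands in for "missing" features (a simplification), and the functions `linear`, `with_interaction`, `shapley_values`, and `interaction_values` are hypothetical names chosen for illustration. The coefficients 2 and 3 are arbitrary.

```python
from itertools import combinations
from math import factorial


def masked_eval(f, x, baseline, present):
    """Evaluate f with features in `present` taken from x, the rest from baseline."""
    return f([x[i] if i in present else baseline[i] for i in range(len(x))])


def shapley_values(f, x, baseline):
    """Exact Shapley values by enumerating every coalition (exponential cost)."""
    m = len(x)
    phi = [0.0] * m
    for i in range(m):
        others = [j for j in range(m) if j != i]
        for size in range(m):
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (masked_eval(f, x, baseline, s | {i})
                                    - masked_eval(f, x, baseline, s))
    return phi


def interaction_values(f, x, baseline):
    """Shapley interaction matrix: each pairwise interaction is split equally
    between [i][j] and [j][i]; the diagonal holds the remaining main effect."""
    m = len(x)
    phi = shapley_values(f, x, baseline)
    mat = [[0.0] * m for _ in range(m)]
    for i, j in combinations(range(m), 2):
        others = [k for k in range(m) if k not in (i, j)]
        total = 0.0
        for size in range(m - 1):
            weight = (factorial(size) * factorial(m - size - 2)
                      / (2 * factorial(m - 1)))
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (masked_eval(f, x, baseline, s | {i, j})
                                   - masked_eval(f, x, baseline, s | {i})
                                   - masked_eval(f, x, baseline, s | {j})
                                   + masked_eval(f, x, baseline, s))
        mat[i][j] = mat[j][i] = total
    for i in range(m):
        mat[i][i] = phi[i] - sum(mat[i][j] for j in range(m) if j != i)
    return mat


def linear(z):
    return 2 * z[0] + 3 * z[1]


def with_interaction(z):
    return 2 * z[0] + 3 * z[1] + z[0] * z[1]


x, baseline = [1.0, 1.0], [0.0, 0.0]
print(shapley_values(linear, x, baseline))
print(shapley_values(with_interaction, x, baseline))
print(interaction_values(with_interaction, x, baseline))
```

For the linear function the off-diagonal entries of the interaction matrix are zero. Once the product term is added, its contribution at this sample is shared equally between the two features, and it shows up on the off-diagonals.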

They Are All Generated From Jupyter Notebooks Available On GitHub.

Uses Shapley Values To Explain Any Machine Learning Model Or Python Function.
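To illustrate what "any Python function" can mean here, the sketch below computes exact Shapley values for an arbitrary callable by brute force. It does not use the shap library. It assumes "missing" features are filled in from a small background dataset and averaged. The names `explain`, `coalition_value`, and `toy_model` are hypothetical, and the enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial
from statistics import fmean


def coalition_value(predict, x, background, present):
    """Average prediction when features in `present` come from x and the
    remaining features come from each background row in turn."""
    return fmean(
        predict([x[i] if i in present else row[i] for i in range(len(x))])
        for row in background
    )


def explain(predict, x, background):
    """Return (base_value, shapley_values) for a single sample x."""
    m = len(x)
    cache = {}

    def value(present):
        key = frozenset(present)
        if key not in cache:
            cache[key] = coalition_value(predict, x, background, key)
        return cache[key]

    phi = []
    for i in range(m):
        others = [j for j in range(m) if j != i]
        contribution = 0.0
        for size in range(m):
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                contribution += weight * (value(set(subset) | {i}) - value(subset))
        phi.append(contribution)
    return value(()), phi


def toy_model(z):
    # Any callable works; this one is non-linear and ignores its last input.
    return max(z[0], z[1]) + z[2]


background = [[0.0, 0.0, 0.0, 0.0], [2.0, 2.0, 2.0, 2.0]]
sample = [4.0, 1.0, 3.0, 9.0]
base_value, phi = explain(toy_model, sample, background)
print(base_value, phi)
```

Two properties are easy to check on the result: the base value plus the sum of the attributions equals the model output for the sample, and the ignored fourth feature receives an attribution of zero.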
